DeepSeek AI and Indian IT: Our view on the impact

Pivot from brute capex to cost-efficient AI platforms could benefit Indian IT

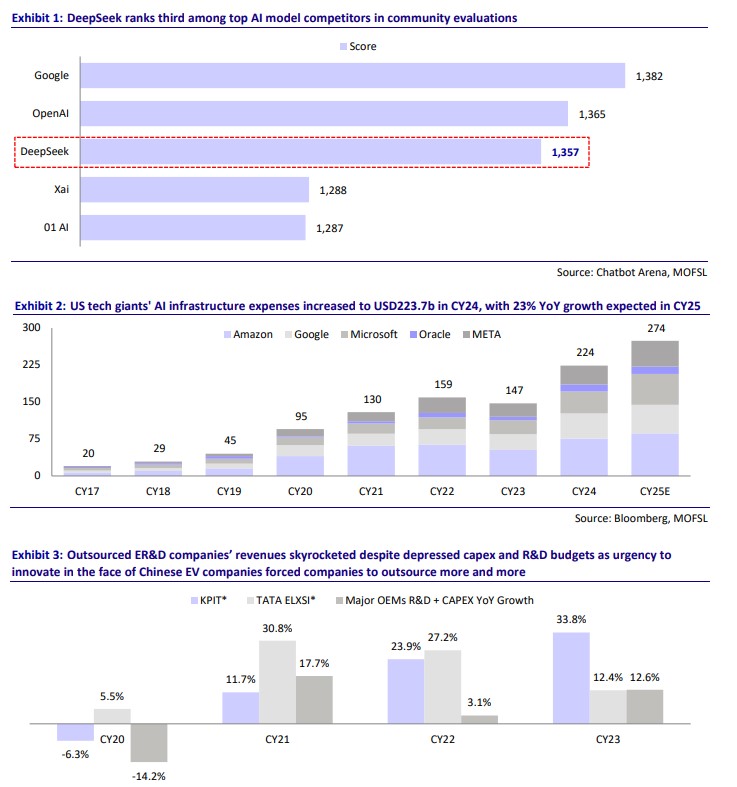

DeepSeek, a Chinese AI startup, has developed the R1 model, which rivals leading AI reasoning models such as OpenAI’s o1. Using a Mixture-of-Experts (MoE) architecture, R1 activates only 37 billion of its 671 billion parameters for each token it processes, significantly reducing computational costs and energy consumption while maintaining top-tier performance. Remarkably, DeepSeek achieved this with a development cost of around USD6m (although this figure is being debated), defying industry norms under which comparable results cost about 10x as much. Further, by making R1 fully open-source, DeepSeek has not only widened access to cutting-edge AI but also intensified global competition, pushing U.S. companies to rethink their strategies and investments. The stock prices of most chipmakers have reacted negatively, as this implies much lower compute demand than earlier anticipated. However, we believe this could shift the focus from capex to cost-efficient AI platforms, possibly benefitting Indian IT.

GenAI and Cloud: How does Indian IT make the most of this?

Services spending generally seems to follow the big-tech capex cycle, as it did during the cloud adoption phase, and we could see something similar with AI. Back then, IaaS was merely a starting point, with hyperscalers differentiating themselves through PaaS and SaaS offerings.

Similarly, the LLM itself may lose its edge as the primary moat. Just as PaaS and SaaS, built on top of basic compute infrastructure, became the true kingmakers in cloud, interfaces built around LLMs are likely to play that role in GenAI.

Indian IT can play a significant role in this evolution by driving platform engineering and outsourced engineering capabilities, enabling enterprises to design, build, and scale these interfaces effectively. This could position Indian IT as a key player in the low-cost GenAI wave.