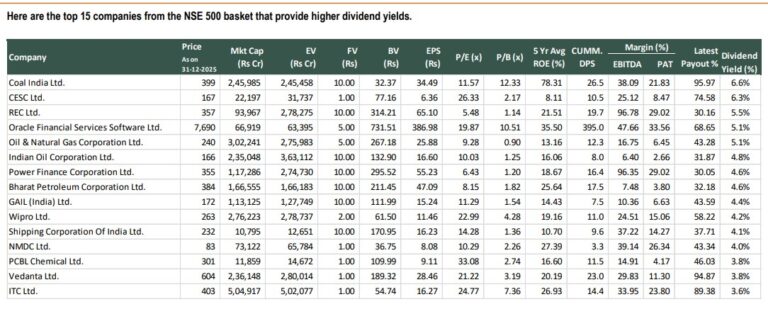

Top 15 companies from the NSE 500 basket that provide higher dividend yields

Arjun

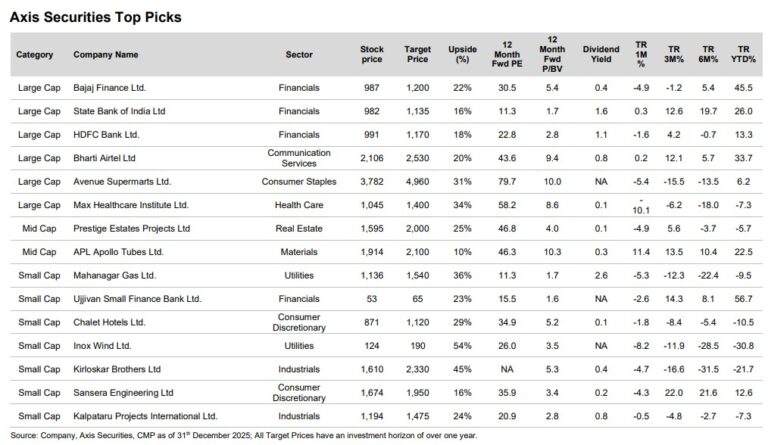

We maintain our Top Picks recommendations unchanged for the month as we continue to...

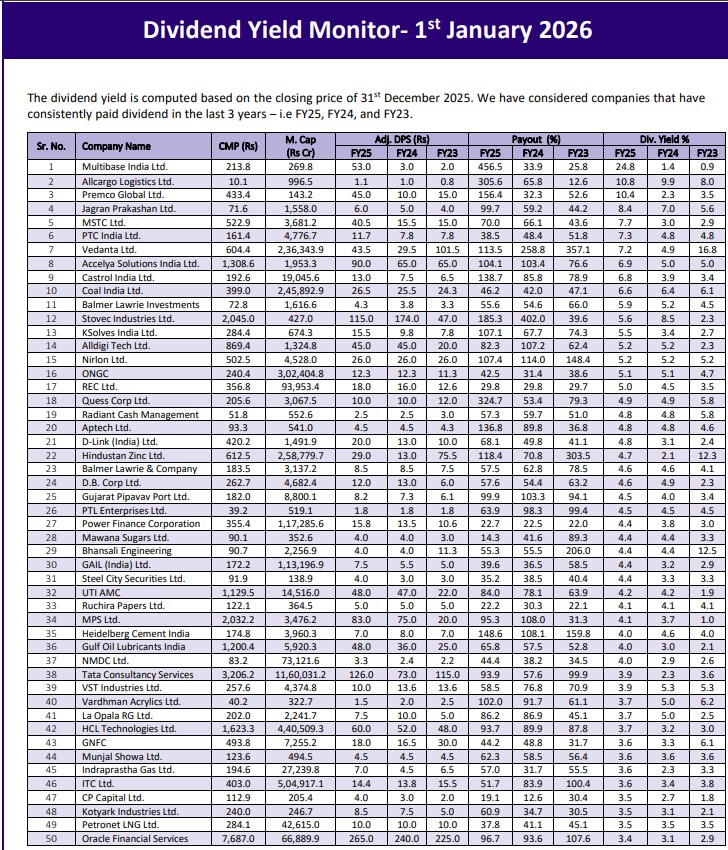

Multibase India Ltd paid an interim dividend of Rs 53 per share in Nov’24

We expect V-Mart to deliver a robust ~18% revenue CAGR over FY25-28, driven by...

Management reiterated its focus on optimising topline mix to yield superior GMV-revenue conversion and...

Going forward, the company has outlined a clear strategy to maintain its leadership position...

The company has multiple triggers and is trading at a steep discount to its large...

MIDWESTL's net debt stood at INR2.2b, translating into a Net Debt/EBITDA of 1.3x as...

The hospitality portfolio is also expected to grow to 3,300 keys by FY30

The Indian dredging industry has stringent pre-qualification norms on execution track record, revenue thresholds...